This project compares two neural networks, one trained via backpropagation and one trained via reinforcement learning, on their ability to drive a car around a racetrack until it successfully completes a lap.

To achieve this, I have chosen to use the TensorFlow library in Python to run the backpropagation algorithm. As the main application is written in C++, the training data will be collected there by having the user drive three laps of the track and recording their inputs every update. The recording will then be saved to a CSV file and loaded into the Python backpropagation script, which will run the algorithm and save the calculated weights to a separate CSV file. The calculated weights will then be loaded back into the C++ application, and the neural network will run using these weights.
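As a rough sketch of the Python side of this CSV round trip, the two helpers below read recorded samples and write weights back out. The file layouts are assumptions made for illustration only: each training row is taken to hold `n_inputs` sensor values followed by the user's recorded controls, and each weight row is taken to hold a layer index followed by one neuron's weights. The names `load_training_data` and `save_weights` are hypothetical, and the TensorFlow training step that would sit between them is omitted.

```python
import csv
import io


def load_training_data(stream, n_inputs):
    """Split each CSV row into (network inputs, recorded user controls).

    Assumed layout: the first n_inputs columns are inputs, the rest are targets.
    """
    features, targets = [], []
    for row in csv.reader(stream):
        if not row:
            continue  # skip blank lines
        values = [float(v) for v in row]
        features.append(values[:n_inputs])
        targets.append(values[n_inputs:])
    return features, targets


def save_weights(stream, layers):
    """Write one CSV row per neuron: layer index, then that neuron's weights.

    Assumed layout: layers is a list of layers, each a list of per-neuron
    weight lists. The C++ loader would need to agree on this format.
    """
    writer = csv.writer(stream)
    for layer_index, layer in enumerate(layers):
        for neuron_weights in layer:
            writer.writerow([layer_index, *neuron_weights])


if __name__ == "__main__":
    # In-memory stand-ins for the real files, with made-up values.
    recording = io.StringIO("0.5,0.2,0.9,1.0,-0.3\n0.4,0.1,0.8,0.5,0.0\n")
    inputs, controls = load_training_data(recording, n_inputs=3)
    print(inputs, controls)

    out = io.StringIO()
    save_weights(out, [[[0.1, 0.2], [0.3, 0.4]], [[0.5, 0.6]]])
    print(out.getvalue())
```

Passing file-like objects rather than paths keeps the helpers easy to test in memory; with real files, `open(path, newline="")` is the form the `csv` module recommends.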

For the reinforcement learning algorithm, I will be using Q-learning integrated into a neural network (Deep Q-Learning). In summary, this algorithm uses a reward signal to decide whether it should adjust its weights. In this project, the car earns a bigger reward the further it gets around the track and is heavily punished for colliding with the walls. When it receives a reward lower than the previous one, it adjusts its weights and resets to its start position to try again. The weights it has calculated can be saved at any time and loaded later.
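The reward scheme described above can be sketched as follows, together with the textbook Q-learning target that a Deep Q-Learning network is usually trained towards. This is an illustration under assumptions, not the project's actual code: the function names, the use of lap progress as a fraction between 0 and 1, the penalty value of -10 and the discount factor are all hypothetical, and the project's own update rule may differ from the standard target shown here.

```python
def reward(progress, collided, collision_penalty=-10.0):
    """Reward grows with lap progress; a wall collision is heavily punished.

    progress: assumed fraction of the lap completed, in [0, 1].
    collision_penalty: hypothetical value, chosen only for illustration.
    """
    return collision_penalty if collided else progress


def q_target(r, next_q_values, done, gamma=0.99):
    """Standard Q-learning target: r + gamma * max over next-state Q-values.

    When the episode has ended (for example after a crash and reset),
    there is no next state, so the target is just the reward.
    """
    if done:
        return r
    return r + gamma * max(next_q_values)


if __name__ == "__main__":
    # The car is a quarter of the way round and has not crashed.
    r = reward(0.25, collided=False)
    print(q_target(r, next_q_values=[0.1, 0.4, 0.2], done=False))

    # The car hits a wall: large negative reward, episode ends.
    r = reward(0.25, collided=True)
    print(q_target(r, next_q_values=[0.1, 0.4, 0.2], done=True))
```

In a Deep Q-Learning setup, the network's predicted value for the action taken is typically nudged towards this target by a gradient step, which is where the weight adjustment happens.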

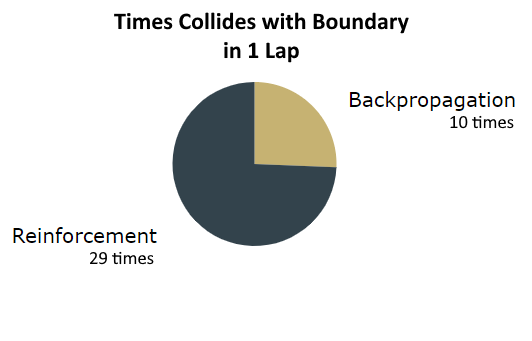

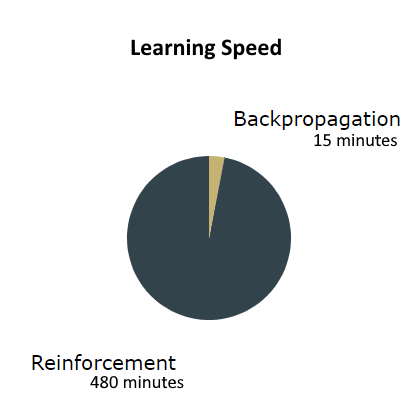

Once both algorithms have calculated their weights, both sets of weights are loaded and compared in the C++ application. Below I detail my findings, comparing the two algorithms under topics such as time taken to implement, accuracy of the calculated weights and learning speed. To gather these results, I ran both neural networks side by side and observed their behaviour.