A Comparison of Backpropagation and Reinforcement Learning as Training Mechanisms for an Artificial Neural Network to Play a Computer Game.

Project Abstract

The purpose of my project is to investigate and compare the advantages and disadvantages of two different training mechanisms of Artificial Neural Networks (ANN) in the context of interacting with a game world: Backpropagation and Reinforcement Learning respectively.

Work Done

Following is a link to the source code and documentation of this investigation:

https://github.com/Richard-Fleming/FYP

Research Question and Background

I’ve chosen to investigate and compare two training methods for artificial neural networks within the context of a game: Backpropagation and Reinforcement Learning respectively. What sparked this interest was the AI of the Alien in Alien: Isolation. Throughout the game, the developers managed to simulate the feeling of the Alien getting smarter and learning the players playstyle. In reality, this was achieved using 2 different AI and the Alien’s own behaviour tree which was unlocked as the player made their way through the game.

But that made me begin to think about the uses of ANNs in gamesdevelopment in general. For example, one could train an ANN with the purposes of playtesting, generating a model that mimics players in oder to spot any bugs or problems a player could encounter. Going back to gameplay, what about creating an anemy that runs off of an ANN that learns and improves as the game goes on. What kind of gameplay experiences could someone craft for the player with an enemy that adjusts to the players choice of playstyle, and what choices could the player make to navigate this. to that end, I chose to investigate two methods of training an ANN and weighting the pros and cons of the two methods to try and see which of the two would be better suited for interacting with a game environment.

Experiments Used

During my investigation, I ran and trained the ANN Models through a randomly generated obstacle course where the ANN would choose the appropriate action based on the incoming obstacle. This was all done with the goal of tracking and identifying a number of key factors regarding the use of each training method:

- Time and recources needed to train.

- Accuracy of choices.

- Similarity to human players.

In the case of point one, Backpropagation requires input and data beforehand to train the model off of, while Reinforcement Learning requires a great number of cycles to learn from the environment, and depending on the method of learning, may require large numbers of Q tables using normal Q learning methods.

The second point is more in regards to how long the ANN models can survive and make the correct choices in each situation. When given as much of an equal chance as possible, which of the two methods allows the ANN to progress further.

Finally the last point is more sibjective, regarding how closely does the ANN resemble a human player as they progress through the game.

Results

from my experiments using backpropagtaion and a Q learning method, I found that while the backpropagation method provide consistant and reliable outputs and data, it also was subject to bias; since all of it’s input data came from me, it would develop the same habits as I do and tackle the obstacles in a similar manner.

On the other hand, the Q learning method allowed for emergent gameplay to occur as the ANN tackled the obstacles in it own way, often developing it’s own habits, but at the same time could provide unreliable data, and was prone to developiong bad habits that would hinder it.

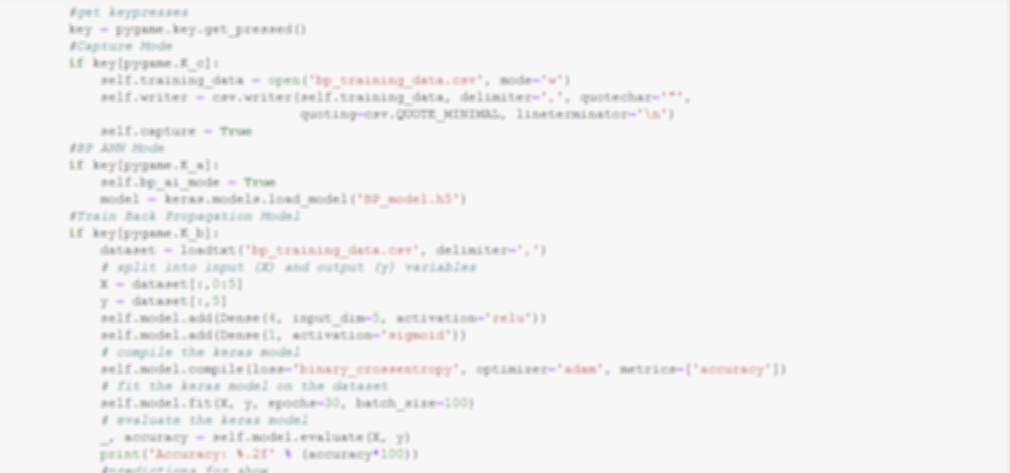

In regards to time and resources, backpropagation required little time and effort to provide training data for on account of the small number of inputs and situations in my game. the result was a csv containing the position and sizes of the obstacles encountered as well as my inputs in response, which I then used to generate a model in an h5 file. Reinforcemtn Learning meanwhile, as a result of choosing Q learning, I had to create 16 reward tables based on each situation the ANN would find itself in, and a Q table in the ofrm of a csv that I could load and use later.

I felt that the backpropagation method provided gameplay that closer resembled a human, but the Q learning method allowed for emergant gameplay and new solutions to problems.

Conclusions

After my experiments, I found that backpropagation provided gameplay that was more stable and consistant, but biased as a result of being trained solely by my inputs. On the other hand, while reinforcement learning allowed for emergant gameplay and unexpected way of tackling obstacles, it could also run the risk of developing bad habits and unstable gameplay that could only be ironed out with time and more iterations.

To answer the question of which methods to use, I believe that the answer lies somewhere in between. Neither method truly proved better than the other, and I feel that each method is simply better suited to different situations. Backpropagation could be a powerful tool for playtesting, as it can allow for the recreation of bugs or glitches consistantly using player input, while the emergant gameplay og reinforecemtn learning may lend itself to crefting unique experiences for players.

One prospect I find interesting would be combining the two methods. for example, when creting an enemy, backpropagation could provide a stable and steady base for reinforcement learning to venture off of without losing consistency. One thing is certain though; both methods can lead to new and interesting developents in games, and games development.

References

Sutton and Barto "Reinforcement Learning" (2nd Edition) - 2020

Russell and Norvig "Artificial Intelligence - A Modern Approach" (3rd Edition) - 2010